It’s been quite fascinating to watch the Customer Data Platform industry develop over the past few years. So far, we’ve seen two main trends emerge: extension of CDP product scope beyond the core of building the customer database itself and expansion into new industries. Both shed light on what we can expect to happen next.

Product Scope

CDP product scope has a more convoluted history than you might think. When the CDP concept was first defined, it referred to systems that built a customer database to support a specific marketing application such as predictive modeling or campaign management. It took some time for the vendors to realize that the database itself was ultimately more valuable than any one application, because the database was more central to their clients’ needs. At the same time, other people – often marketers who had found through personal experience that unified customer data was rarely available – started by building database-only CDPs, based on the same insight that the value was in the database.

The junction of these two streams is one reason that CDPs have always been so bafflingly diverse: some products started with a footprint that included applications, while others started with the database alone. Another reason is that CDPs also included a third stream of vendors: tag managers whose original focus was on building connectors to ingest and distribute data.

But the nature of software products is to expand their functions. A cynic might argue the reason is that companies have developers on staff and need to keep them busy. But a more realistic explanation is that clients are always asking for new features and vendors are eager to oblige. What this means for CDPs is that even vendors who started out simply assembling data or building databases have added applications, typically starting with analytical features such as segmentation, visualization, and predictive modeling, and then moving further towards execution with message selection and experience orchestration. The step after that is message delivery – and, sure enough, we’re starting to see CDPs with email engines as well.

This raises new definitional challenges, since at some point a system that is actively executing customer experiences is clearly more than a CDP. I personally have no problem with this, having argued some time ago that we should distinguish between CDP functions, which can be part of a larger product, and CDP systems, which focus on building a CDP only. We’re seeing an increasing number of products that include CDP functions in a larger package, including offerings from Oracle and Adobe. Think of it as "CDP inside".

The trick will be for buyers to understand that whether a customer database is a stand-alone product or a component of something bigger, it’s only a CDP if it meets the definition: packaged software that builds a unified persistent customer database that is accessible to other systems. Oddly enough, “accessible to other systems” turns out to be the most critical element, because many vendors build a CDP-style database to support their own applications and don't share it with others. So, one of my major tasks in the year ahead will be to stress this point to anyone who will listen. The message will be very much along the lines of the original “Intel Inside” campaign: “insist on the real thing – accept no substitutes”. It’s a dauntingly subtle message to convey in a world where attention spans are measured in seconds. I like to think of it as a challenge.

New Industries

Most early CDPs were deployed at retail and publishing companies. Financial services and travel/hospitality came next, and adoption has recently spread to B2B, healthcare, education, and telco. In itself, this progression isn’t news. But I just recently saw a presentation by CDP vendor Boxever that suggested the sequence was more than random. They pointed out that adoption came earliest in industries with the shortest, most transactional buying cycles, and then spread quite steadily to industries with longer cycles and higher product costs.

This may seem obvious in hindsight, but it’s not the only possible explanation. My previous view was that retail and publishing were early adopters because they had such poor systems in place before CDPs, making the incremental benefit higher than in banking and travel, where customer data was already fairly well organized. You could also argue that retail and publishing are the industries under the most competitive pressure from online companies, and thus have the greatest need for customer data to deliver personalized experiences. But those explanations always felt a bit contrived and ran into the fact that retail, financial, travel, and telecommunications had always been the leading industries in customer data-driven marketing. So, it always struck me as odd that they were adopting CDP at such different rates.

On the other hand, viewing CDP utility as a function of buying cycle does make sense. Companies with quick, simple transactions have more data points and simpler purchase processes than companies with fewer, longer running, more complicated transactions. This means those companies can more easily derive benefit from a CDP through tactical applications like predictive modeling to select lists or recommend a next offer. The other industries can still benefit from unified customer data but will need deeper analytics to convert their data into value.

A corollary may be that vertical specialists will have higher success rates in these late-adopting industries because their greater complexity requires specialized applications that only industry experts can build and explain. Many of these applications will require tight integration with industry operational systems, such as ticketing in travel, call details in telecommunications, and medical records in health care. B2B might seem an exception but that’s only because its specialized systems are marketing automation and sales automation, which are very familiar to many marketing technologists.

In any event, if the correlation between CDP adoption and buying cycle complexity is valid, it's a useful tool for assessing how easily CDP vendors can penetrate new markets. That is surely a helpful thing to have.

What Next?

While industry history is interesting, the question everyone really cares about is, What happens next? That CDP vendors will continue to expand their footprint is obvious. So is growth into new industries. Less clear is whether stand-alone CDPs will continue to thrive or “CDP inside” solutions will take over.

We are in fact already seeing a movement in the “CDP inside” direction. Leaders include the big marketing clouds and narrower vendors with roots in email and Web site personalization. Acquisitions are one sign that companies are expanding their capabilities and, sure enough, last year saw CDP acquisitions including Datorama, Treasure Data, and several smaller companies (Marketing G2, Datatrics, and Audiens).

So the best bet is that the CDP market will follow the same pattern as other markets, with best-of-breed products slowly replaced by integrated solutions, starting in the mid-market and working up into larger enterprises. At the very high end, CDPs with advanced data management technology may survive and even grow in the short term, but they’ll ultimately be pushed into a corner with dwindling market share. Some other vendors may carve out niches in data connectivity, identity resolution, or other specialized functions within the CDP stack. At the other extreme, vendors with broad functions might find success as integrated solutions, especially if they are specialists in a particular industry. The hardest position to maintain will be a data-focused CDP serving mid-size companies: those firms will face increasingly compelling competition from broad-scope vendors who offer a CDP as part of a larger product.

Whether this is good or bad news depends on where you sit. Late-to-market CDP vendors, especially data-focused firms lacking special technology, may find the window of opportunity has already shut. Companies with broad functions that are adding CDP features shouldn’t have a problem, although they may need to talk more about their applications and less about their internal CDP.

Buyers, on the other hand, stand to benefit from a wider range of systems that offer the core CDP benefits of unified, shareable customer data. What the CDP industry has accomplished is to establish the need for a unified, open customer database as a central component of every company’s marketing technology architecture. Marketers – and others who use customer data – must insist on solutions that fully meet that need in terms of ingesting data from all sources, retaining full detail, and making the results accessible to all external systems. Ensuring that solutions meet those conditions isn't easy: it’s hard for even experienced technology buyers to understand what different systems really do. Yet buyers have no choice but to be thorough in their evaluation processes: at most companies today, a proper CDP is a foundation for business success.

Sunday, December 16, 2018

Saturday, November 24, 2018

Oracle CX Unity Looks Like a Real Customer Data Platform

I finally caught up with Oracle for a briefing on the CX Unity product they announced in October. Although it was clear at the time that CX Unity offered some version of unified customer data, it was hard to understand exactly what was being delivered. The picture is now much clearer. Here are straight answers to important questions:

It’s a persistent database. CX Unity will ingest all types of data – structured, semi-structured, and unstructured – from Oracle’s own CX systems via prebuilt connectors and from external systems via APIs, batch imports, or Oracle’s integration cloud. It will store these in well-defined structures defined by marketing operations or similar lightly-technical users. The structures will include both raw data and derived variables such as predictive model scores. Oracle plans to release a dozen industry-specific data schemas including B2B and B2C verticals.

It does identity resolution. CX Unity will support deterministic matching for known relationships between customer identifiers and will maintain a persistent ID over time. It will link to the Oracle Identity Graph in the Oracle Data Cloud for probabilistic matches using third party data.

It activates data in near real time. CX Unity can ingest data in real time but it takes 15 to 20 minutes or longer to standardize, match, run models, and place it in accessible formats such as data cubes. Oracle expects that real-time interactions and triggers will run outside of CX Unity.

It shares data with all other systems. Oracle has built connectors to expose CX Unity data within its own customer-facing CX Cloud systems. APIs are available to publish data to other systems but it’s up to partners and clients to use them.

It integrates machine learning. CX Unity includes machine learning for predictive models and recommendations. Results are exposed to customer-facing systems. This capability seems to be what Oracle has in mind when it contrasts CX Unity with other customer data management solutions that it calls merely “database centric”.

It’s not live yet. The B2C customer segmentation features of CX Unity are available now. The full system is slated to be available in mid-2019.

These answers mean that CX Unity meets the definition of a Customer Data Platform: packaged software that creates a unified persistent customer database accessible by other systems. The machine learning and recommendation features would put it in the class of “personalization” CDPs I defined earlier this month. This is a sharp contrast with the CDP alternatives from Oracle’s main marketing cloud competitors: Customer 360 from Salesforce (no persistent database) and Open Data Initiative from Adobe, Microsoft and SAP (more of a standard than a packaged system).

It's likely that CX Unity will be bought mostly by current Oracle CX customers, although Oracle would doubtless be happy to sell it elsewhere. But even if CX Unity sales are limited, its feature list offers a template for buyers to measure other systems against. That will create a broader understanding of what belongs in a customer management system and make it more likely that buyers will get a CDP that truly meets their needs. So its release is a welcome development – especially as Oracle finds ways to present it effectively.
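
For readers who want a concrete picture of the deterministic matching and persistent ID mentioned above, here is a minimal Python sketch. It is not Oracle's implementation, which was not described in that detail: the `IdentityGraph` class, its method names, and the identifier strings are all hypothetical. It uses a standard union-find structure to group identifiers that are known to belong together and keeps the oldest customer ID when two groups merge.

```python
class IdentityGraph:
    """Groups identifiers known to belong to the same customer (hypothetical sketch)."""

    def __init__(self):
        self._parent = {}      # identifier -> parent identifier (union-find forest)
        self._persistent = {}  # root identifier -> persistent customer ID
        self._next_id = 1

    def _find(self, ident):
        # Unknown identifiers start out as their own group.
        self._parent.setdefault(ident, ident)
        while self._parent[ident] != ident:
            # Path halving keeps later lookups short.
            self._parent[ident] = self._parent[self._parent[ident]]
            ident = self._parent[ident]
        return ident

    def link(self, a, b):
        """Record a known (deterministic) relationship between two identifiers."""
        root_a, root_b = self._find(a), self._find(b)
        if root_a == root_b:
            return
        # Collect any customer IDs already issued to either group.
        issued = [self._persistent.pop(r) for r in (root_a, root_b) if r in self._persistent]
        self._parent[root_b] = root_a
        if issued:
            # Keep the oldest ID so it stays stable over time; the other is retired.
            self._persistent[root_a] = min(issued)

    def customer_id(self, ident):
        """Return the persistent customer ID for an identifier, issuing one if needed."""
        root = self._find(ident)
        if root not in self._persistent:
            self._persistent[root] = self._next_id
            self._next_id += 1
        return self._persistent[root]
```

Linking an email address to a cookie and later linking that cookie to a phone number leaves all three identifiers with the same customer ID. Probabilistic matching against third-party data, which the briefing assigns to the Oracle Identity Graph, is a separate problem and is not attempted here.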

Labels:

cdp,

customer data management,

customer data platform,

martech

Sunday, November 18, 2018

Purpose-Driven Marketing Comes to Town

“If you can fake sincerity, you’ve got it made” runs the old joke. The irony-impaired managers of the Association of National Advertisers (ANA) seem to have taken this as serious advice, last week announcing creation of a “Center for Brand Purpose” that will help companies publicize their social purpose. Confirming the worst stereotypes of marketers as cynical hucksters, the press release promotes its mission with the argument that “purpose-led brands grow two to three times faster than their competitors.”

The ANA’s grasp of causation may be no stronger than its sense of morality, but there’s no question it's in tune with the marketing herd. Issue-based marketing is hot. Another announcement last week illustrates the point: a new program from a coalition of 2,600 socially-responsible “Certified B Corporations” such as Ben & Jerry’s aims to convince consumers to buy from companies that share their values.

Most of the B Corps have a legitimate history of activism. But the broader interest in taking social positions is intriguing precisely because the B Corps have been exceptions to the conventional wisdom that businesses should avoid controversial issues. Studies on the topic show mixed results1 and that most people care more about practical matters2. So why the sudden change?

The simple answer is Nike’s Colin Kaepernick ad, which was initially panned as hurting its image but was later reported to boost sales. Marketers being marketers, that was reason enough for many to try something similar.

But I think we can legitimately cite broader trends that have made marketers receptive to the shift. Fox News has demonstrated over the past twenty years that there’s a mass market for partisan bias. The growth of right-wing extremism has led to push-back in support of fairness, reason, and rule of law. The recent election results can be read as a majority rejection of extremism, although other interpretations are possible. If there is indeed an emerging consensus that American ideals are under threat, it’s now safer for companies to promote human rights, the environment, and fair employment practices: positions with broad public support despite attacks from the right.

There are other, more parochial reasons for some companies to take strong policy positions. Companies whose customers are concentrated on one or the other side of the urban/rural divide may benefit from polarizing choices: pro-Kaepernick for Nike, anti-abortion for Hobby Lobby. Industries with bad reputations may aim to change public opinion: oil companies touting their environmental concerns are a long-standing example (although this rarely extends to support for policies that would limit their profits).

The most intriguing current set of companies trying to change their reputations are the big tech firms, which have quickly shifted from fan favorites to villains. Facebook has led the way, with seemingly endless privacy, hate speech, and election scandals resulting in a huge loss of public confidence and threats of government regulation. Other big tech firms haven’t been quite so widely criticized but broad concerns about the impact of tech on society are growing ever more common.

Tech companies are particularly susceptible to reputational damage because they need to recruit tech workers, who skew young, educated, urban, and immigrant. The best of those workers have many employment options and want to work at firms that agree with their values. Again, the problem is most severe for Facebook, whose own employees are increasingly concerned that it is doing more harm than good. But employee protests at Google, Amazon and Microsoft tell a similar story. The recent walkout by 20,000 Google workers over sexual harassment is one more example – and was followed by changes in policies at other tech-driven firms including eBay, Airbnb, and Facebook itself. On the other side of the ledger, Apple has made privacy protection a core part of its own brand, both calling for regulation and resisting government requests to share data. Not coincidentally, Apple’s business isn’t as dependent on consumer data as many of its competitors.

Which brings us back to the original topic. If brands are taking political positions because that’s a good marketing tactic, the insincerity seems reprehensible. It also opens the door to brands supporting socially harmful positions if those are the most popular. At best, expectations should be limited: no one expects a brand to support a policy that hurts its own business, and in fact we’re used to brands advocating policies that favor them. So any position taken by a business must be viewed with skepticism.3

On the other hand, businesses do have a fundamental self-interest in promoting healthy social, political and physical environments. Advocating policies that protect those environments is a perfectly legitimate activity. Publicizing that advocacy is part of the advocacy itself. That it’s now seen as good marketing isn’t new and it isn’t bad: it’s just how things are at this particular moment.

___________________________________________________________________________

1 Edelman, Sprout Social and Finn Partners show consumers want brands to lead on social issues. Morning Consult, Euclid, and Bambu found the opposite.

2 InMoment found 9% of consumers care mostly about brand purpose while 39% care mostly about functionality. The remainder care about both. Waggener Edstrom reports that 55% of consumers say brands can earn their trust by delivering what they promise while fewer than 10% said trust is earned by supporting shared values.

3 Not to mention that brands have been known to attack legitimate research to support their preferred positions, to secretly fund supporters and attacks on opponents, and to say one thing while doing another.

Labels:

marketing,

politics,

social purpose

Saturday, November 03, 2018

Why Are There So Many Types of Customer Data Platforms? It's Complicated.

I spend much of my time these days trying to explain Customer Data Platforms to people who suspect a CDP could help them but lack clear understanding of exactly what a CDP can do. At the end of our encounter they’re often frustrated: a simple definition of CDP still eludes them. The fundamental reason is that CDPs are not simple: the industry has rapidly evolved numerous subspecies of CDPs that are as different from each other as the different kinds of dinosaurs. Just as the popular understanding of dinosaurs – big, cold-blooded and extinct – has little to do with the scientific definition, a meaningful understanding of CDPs often has little to do with what people initially expect.

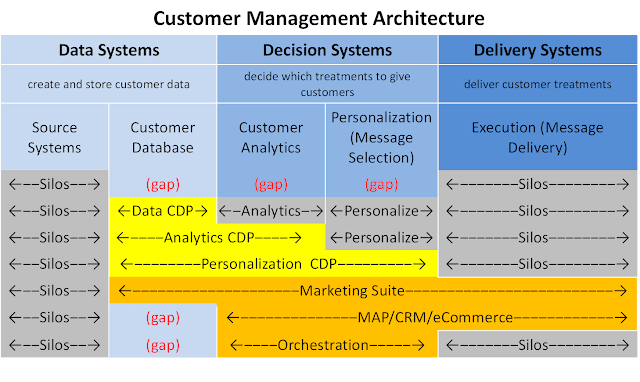

Let’s start with the big picture. For years I’ve divided customer management systems into three broad categories, which are best seen as layers in a unified architecture. Frequent readers of this blog can feel free to recite them along with me (throwing rice at the screen is optional): data, decisions, and delivery.

Refining this notion just a bit, the data layer includes systems that create customer data and systems that store it in a unified customer database. Decision systems include several categories such as marketing planning and content management, but the most relevant here are analytics, including segmentation and predictive models, and personalization*, which selects the best message for each individual. The delivery layer holds both systems that send outbound messages such as email and advertising and interactive systems such as Web sites and call centers. An important point is that it’s hard to do a good job of delivering messages, so delivery systems are large, complex products. Picking the right message is just one of many features and often not the developers’ main concern.

A complete architecture has entries in each of these five categories. But many companies have multiple source and delivery systems that are disconnected: these are the infamous silos. The core technology challenge facing today’s marketers can be viewed as connecting these silos by adding the customer database, analytics, and personalization components that sit in between.

By definition, the CDP fills the Customer Database gap. Some CDPs do only that – I will uncreatively label them as “Data CDPs”.

I’ll also take a slight detour to remind you that the customer database must be persistent – that is, it has to copy data from other systems and store it. This is necessary to track customers over time, since the source systems often don’t retain old identifiers (such as a previous mailing or email address) or, if they do keep them, don’t retain linkages between old identifiers and new ones. There’s also lots of other data that source systems discard once they have no immediate need for it, such as location, loyalty status or life-to-date purchases at the time of a transaction. Marketing and analytical systems may need these and it’s often not possible or practical to reconstruct them from what the source systems retained. This is especially true in situations where the data must be accessed instantly to support real time processes.
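
To make the point about discarded data concrete, here is a minimal Python sketch of the kind of history a persistent database can keep. It assumes a source system that stores only the current value of an attribute such as loyalty status, while the customer database appends each change with its effective date. The `AttributeHistory` class and its method names are hypothetical, not taken from any particular CDP.

```python
from bisect import bisect_right
from datetime import date


class AttributeHistory:
    """Append-only history of one customer attribute, e.g. loyalty status."""

    def __init__(self):
        self._dates = []   # effective dates, kept in sorted order
        self._values = []  # value that took effect on the matching date

    def record(self, effective, value):
        # Insert in date order so late-arriving data is still placed correctly.
        i = bisect_right(self._dates, effective)
        self._dates.insert(i, effective)
        self._values.insert(i, value)

    def as_of(self, when):
        """Return the value in effect on `when`, or None if nothing was known yet."""
        i = bisect_right(self._dates, when)
        return self._values[i - 1] if i else None


history = AttributeHistory()
history.record(date(2018, 1, 1), "silver")
history.record(date(2018, 6, 1), "gold")
status_at_purchase = history.as_of(date(2018, 3, 15))  # status on the transaction date
```

The source system in this example would report only "gold" today; the stored history is what lets an analyst recover the status that applied when a March transaction took place.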

But I digress. Back to our Data CDP, which obviously leaves the additional gaps of analytics and personalization. Why wouldn’t a CDP fill those as well? One answer is that some CDPs do fill them: we’ll label CDPs with a customer database plus customer analytics as “Analytics CDPs” and those with a customer database, analytics, and personalization as “Personalization CDPs”, again winning no prizes for creativity. A second answer is that some companies already have chosen tools they want for analytics or personalization. Like message delivery, those are complicated tasks that can easily be the sole focus of a “best of breed” product or products.

This variety of CDPs also addresses another question that some find perplexing: why one company might purchase more than one CDP. As you’d expect, different CDPs are better at some things than others. In particular, some systems are especially good at database building while others are good at analytics or personalization. It often depends on the origins of the product. The result is that a company might buy one CDP for its database features and have it send a unified feed into a second CDP for analytics and/or personalization. There is some extra cost and effort involved but in some situations it's worth it.

Are you still with me? I’ve presented three different types of CDPs but hope the differences in what they do and which you’d want are fairly clear.** Now comes the advanced course: other systems that either call themselves CDPs or offer CDP alternatives.

These fall into many categories but can all be mapped to the same set of five capabilities. Let’s start with Marketing Suites, by which I mean delivery systems that have expanded backwards to include a customer database, analytics and personalization. Many email vendors have done this and it’s increasingly common among Web personalization and mobile app marketing products. In most cases, these vendors now deliver across multiple channels. Adobe Experience Cloud also fits in here.

To qualify as a CDP, these systems would need to ingest data from all sources, maintain full input detail, and share the results with other systems. Many don’t, some do. We could easily add another CDP category to cover them – “Marketing Suite CDP” would work just fine. But this probably stretches the definition of CDP past the breaking point. For CDP to have any meaning, it must describe a system whose primary purpose is to build a persistent, sharable customer database. The primary purpose of delivery systems is delivery, something that’s hard to do well and will remain the primary focus of vendors who do it. So rather than over-extend the definition of CDP, let’s think of these as systems that include a customer database as one of their features.

We also have some easier cases to consider, which are systems that provide customer analytics and personalization but don’t build a unified customer database. Some of these also provide delivery functions – examples include marketing automation, CRM, and ecommerce platforms. Others don’t do delivery; we can label them as Orchestration. In both cases, the lack of a unified, sharable customer database makes it clear that they’re not CDPs. Complementing them with a CDP is an obvious option. So not much confusion there, at least.

Finally, we come to the Customer Experience Clouds: collections of systems that promise a complete set of customer-facing systems. Oracle and Salesforce are high on this list. Both of those vendors have recently introduced solutions (CX Unity and Customer 360) that are positioned as providing a unified customer view. It’s clear that Salesforce does this by accessing source data in real time, rather than creating a unified, persistent database. Oracle has been vague on the details but it looks like they take a similar approach. In other words, the reality for those systems shows a gap where the persistent customer database should be. Again, this makes CDPs an excellent complement, although the vendors might disagree.

So, there you have it. I won’t claim the answers are simple but do hope they’re a little more clear. All CDPs build a unified, persistent, sharable customer database. Some add analytics and personalization. If they extend to delivery, they're not a CDP. Systems that aren’t CDPs may also build a customer database but you have to look closely to ensure it’s unified, persistent and shareable. Often a CDP will complement other systems; in some cases, it might replace them.

Still disappointed? I am genuinely sorry. But if it helps, bear this in mind: while simple answers are nice, correct answers – which in this case means getting a solution that fits your needs – are what matter most.

_______________________________________________

*I usually call this ‘engagement’ but think ‘personalization’ will be easier to understand in today’s context. For the record, I’m specifically referring here to selecting the best message on an individual-by-individual basis, which isn’t necessarily implied by ‘personalization’.

**If you want to know which CDP vendors fit into each category, the CDP Institute’s free Vendor Comparison report covers these and other differentiators. Products without automated predictive modeling can be considered Data CDPs; those having automated predictive but lacking multi-step campaigns could be considered Analytics CDPs; those with multi-step campaigns could be considered Personalization CDPs. There are many other nuances that could be relevant to your particular situation: the report lists 27 differentiators in all.
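
The rule of thumb in the second footnote can be written as a short Python function. This is only a sketch: the function and parameter names are hypothetical, and it assumes the product already meets the base CDP definition. The footnote's wording leaves one case open, a product with multi-step campaigns but no automated predictive modeling, and the sketch assumes multi-step campaigns take precedence.

```python
def cdp_type(automated_predictive: bool, multi_step_campaigns: bool) -> str:
    """Classify a product that already qualifies as a CDP, per the footnote's rule of thumb."""
    # Assumption: multi-step campaigns decide the category even without predictive modeling.
    if multi_step_campaigns:
        return "Personalization CDP"
    if automated_predictive:
        return "Analytics CDP"
    return "Data CDP"
```

Two yes/no answers obviously cannot capture all 27 differentiators in the report, so treat the result as a starting point for evaluation.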

Let’s start with the big picture. I’ve for years divided customer management systems into three broad categories, which are best seen as layers in a unified architecture. Frequent readers of this blog can feel free to recite them along with me (throwing rice at the screen is optional): data, decisions, and delivery.

Refining this notion just a bit:, the data layer includes systems that create customer data and systems that store it in a unified customer database. Decision systems include several categories such as marketing planning and content management, but the most relevant here are analytics, including segmentation and predictive models, and personalization*, which selects the best message for each individual. The delivery layer holds both systems that send outbound messages such as email and advertising and interactive systems such as Web sites and call centers. An important point is it’s hard to do a good job of delivering messages, so delivery systems are large, complex products. Picking the right message is just one of many features and often developers’ main concern.

A complete architecture has entries in each of these five categories. But many companies have multiple source and delivery systems that are disconnected: these are the infamous silos. The core technology challenge facing today’s marketers can be viewed as connecting these silos by adding the customer database, analytics, and personalization components that sit in between.

By definition, the CDP fills the Customer Database gap. Some CDPs do only that – I will uncreatively label them as “Data CDPs”.

I’ll also take a slight detour to remind you that the customer database must be persistent – that is, it has to copy data from other systems and store it. This is necessary to track customers over time, since the source systems often don’t retain old identifiers (such as a previous mailing or email address) or, if they do keep them, don’t retain linkages between old identifiers and new ones. There’s also lots of other data that source systems discard once they have no immediate need for it, such as location, loyalty status or life-to-date purchases at the time of a transaction. Marketing and analytical systems may need these and it’s often not possible or practical to reconstruct them from what the source systems retained. This is especially true in situations where the data must be accessed instantly to support real time processes.

But I digress. Back to our Data CDP, which obviously leaves the additional gaps of analytics and personalization. Why wouldn’t a CDP fill those as well? One answer is that some CDPs do fill them: we’ll label CDPs with a customer database plus customer analytics as “Analytics CDPs” and those with a customer database, analytics, and personalization as “Personalization CDPs”, again winning no prizes for creativity. A second answer is that some companies have already chosen the tools they want for analytics or personalization. Like message delivery, those are complicated tasks that can easily be the sole focus of a “best of breed” product or products.

This variety of CDPs also addresses another question that some find perplexing: why one company might purchase more than one CDP. As you’d expect, different CDPs are better at some things than others. In particular, some systems are especially good at database building while others are good at analytics or personalization. It often depends on the origins of the product. The result is that a company might buy one CDP for its database features and have it send a unified feed into a second CDP for analytics and/or personalization. There is some extra cost and effort involved but in some situations it's worth it.

Are you still with me? I’ve presented three different types of CDPs but hope the differences in what they do and which you’d want are fairly clear.** Now comes the advanced course: other systems that either call themselves CDPs or offer CDP alternatives.

These fall into many categories but can all be mapped to the same set of five capabilities. Let’s start with Marketing Suites, by which I mean delivery systems that have expanded backwards to include a customer database, analytics and personalization. Many email vendors have done this and it’s increasingly common among Web personalization and mobile app marketing products. In most cases, these vendors now deliver across multiple channels. Adobe Experience Cloud also fits in here.

To qualify as a CDP, these systems would need to ingest data from all sources, maintain full input detail, and share the results with other systems. Many don’t, some do. We could easily add another CDP category to cover them – “Marketing Suite CDP” would work just fine. But this probably stretches the definition of CDP past the breaking point. For CDP to have any meaning, it must describe a system whose primary purpose is to build a persistent, sharable customer database. The primary purpose of delivery systems is delivery, something that’s hard to do well and will remain the primary focus of vendors who do it. So rather than over-extend the definition of CDP, let’s think of these as systems that include a customer database as one of their features.

We also have some easier cases to consider, which are systems that provide customer analytics and personalization but don’t build a unified customer database. Some of these also provide delivery functions – examples include marketing automation, CRM, and ecommerce platforms. Others don’t do delivery; we can label them as Orchestration. In both cases, the lack of a unified, sharable customer database makes it clear that they’re not CDPs. Complementing them with a CDP is an obvious option. So not much confusion there, at least.

Finally, we come to the Customer Experience Clouds: collections of systems that promise a complete set of customer-facing systems. Oracle and Salesforce are high on this list. Both of those vendors have recently introduced solutions (CX Unity and Customer 360) that are positioned as providing a unified customer view. It’s clear that Salesforce does this by accessing source data in real time, rather than creating a unified, persistent database. Oracle has been vague on the details but it looks like they take a similar approach. In other words, the reality for those systems shows a gap where the persistent customer database should be. Again, this makes CDPs an excellent complement, although the vendors might disagree.

So, there you have it. I won’t claim the answers are simple but do hope they’re a little more clear. All CDPs build a unified, persistent, sharable customer database. Some add analytics and personalization. If they extend to delivery, they're not a CDP. Systems that aren’t CDPs may also build a customer database but you have to look closely to ensure it’s unified, persistent and sharable. Often a CDP will complement other systems; in some cases, it might replace them.

Still disappointed? I am genuinely sorry. But if it helps, bear this in mind: while simple answers are nice, correct answers – which in this case means getting a solution that fits your needs – are what matter most.

_______________________________________________

*I usually call this ‘engagement’ but think ‘personalization’ will be easier to understand in today’s context. For the record, I’m specifically referring here to selecting the best message on an individual-by-individual basis, which isn’t necessarily implied by ‘personalization’.

**If you want to know which CDP vendors fit into each category, the CDP Institute’s free Vendor Comparison report covers these and other differentiators. Products without automated predictive modeling can be considered Data CDPs; those having automated predictive but lacking multi-step campaigns could be considered Analytics CDPs; those with multi-step campaigns could be considered Personalization CDPs. There are many other nuances that could be relevant to your particular situation: the report lists 27 differentiators in all.
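The rule of thumb in that second footnote can be written down as a tiny decision function. This is only a minimal Python sketch: the `Product` class, its field names, and the sample products are hypothetical, and checking multi-step campaigns before predictive modeling is my own assumption about how to resolve a product that has the former but not the latter.

```python
from dataclasses import dataclass


@dataclass
class Product:
    name: str                    # hypothetical product name
    automated_predictive: bool   # has automated predictive modeling
    multi_step_campaigns: bool   # supports multi-step campaigns


def cdp_category(product: Product) -> str:
    """Apply the footnote's rule of thumb to one product."""
    # Assumption: multi-step campaigns take precedence over the other test.
    if product.multi_step_campaigns:
        return "Personalization CDP"
    if product.automated_predictive:
        return "Analytics CDP"
    return "Data CDP"


if __name__ == "__main__":
    samples = [
        Product("Product X", False, False),
        Product("Product Y", True, False),
        Product("Product Z", True, True),
    ]
    for p in samples:
        print(p.name, "->", cdp_category(p))
```

Two yes/no attributes are obviously a simplification; the other differentiators in the report would need their own fields.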

Sunday, October 07, 2018

How to Build a CDP RFP Generator

Recent discussions with Customer Data Platform buyers and vendors have repeatedly circled around a small set of questions:

- what are the use cases for CDP? (This really means, when should you use a CDP and when should you use something else?)

- what are the capabilities of a CDP? (This really means, what are the unique features I’ll find only in a CDP? It might also mean, what features do all CDPs share and which are found in some but not others?)

- which CDPs have which capabilities? (This really means, which CDPs match my requirements?)

- can someone create a standardized CDP Request for Proposal? (This comes from vendors who are now receiving many poorly written CDP RFPs.)

1. defining a set of common CDP use cases

2. identifying the CDP capabilities required to support each use case

3. identifying the capabilities available in specific CDPs

4. having buyers specify which use cases they want to support

5. auto-generating an RFP that lists the requirements for the buyer’s use cases

6. creating a list of vendors whose capabilities match those requirements

What’s interesting is that steps 1-3 describe information that could be assembled once and used by all buyers, while steps 5 and 6 are purely mechanical. So only step 4 (picking use cases) requires direct buyer input. This means the whole process could be made quite easy.

(Actually, there’s one more bit of buyer input, which is to specify which capabilities they already have available in existing systems. Those capabilities can then be excluded from the RFP requirements. The capabilities list could also be extended to non-CDP capabilities, since most use cases will involve other systems that could have their own gaps. These nuances don’t change the basic process.)

As a sanity check, I’ve built a small Proof of Concept for this approach using an Excel spreadsheet. I'm happy to say it works quite nicely. I'll share a simplified version here to illustrate how it works. In particular, I'll show just a few capabilities, use cases, and (anonymous) vendors.

We’ll start with the static data.

The columns are:

- Capability: a system capability.

- Description: a description of the capability. This can both help users understand what it is and be a requirement in the resulting RFP. Or, we could create separate RFP language for each capability. This could go into more detail about the required features.

- CDP Feature: indicates whether the capability would be found in at least some CDPs. The CDP RFP can ignore features that aren't part of the CDP, but it's still important to identify them because they could create a gap that makes the use case impossible. For example, consider the first row in the sample table, whether the Web system can accept external input. This isn't a CDP feature but it's needed to deliver the use case for Real time web interactions.

- Use Cases: shows which capabilities are needed for which use case. For items that relate to a specific channel, each channel would be a separate use case. In the sample table, Single Source Access is specifically related to the Point of Sale channel while Real Time Interactions are specifically related to Web.

- Vendor Capabilities: these indicate whether a particular vendor provides a particular capability.
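For anyone who would rather read a data structure than a column list, here is a minimal Python sketch of one way the static data could be held outside Excel. It is not the actual spreadsheet: the `Capability` class, the descriptions, the use case names, and the vendor assignments are all illustrative placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Capability:
    name: str             # Capability
    description: str      # Description (could double as RFP language)
    cdp_feature: bool     # CDP Feature: found in at least some CDPs?
    use_cases: frozenset  # Use Cases that need this capability
    vendors: frozenset    # Vendor Capabilities: vendors that provide it


# Illustrative rows only; every value here is a placeholder.
STATIC_DATA = [
    Capability(
        name="Web - accept input",
        description="Web site can accept external input",
        cdp_feature=False,
        use_cases=frozenset({"Real time web interactions"}),
        vendors=frozenset(),
    ),
    Capability(
        name="Real time interactions",
        description="Return customer data during a live interaction",
        cdp_feature=True,
        use_cases=frozenset({"Real time web interactions"}),
        vendors=frozenset({"Vendor B"}),
    ),
    Capability(
        name="Single source access",
        description="Load data from a single point of sale system",
        cdp_feature=True,
        use_cases=frozenset({"Point of sale analysis"}),
        vendors=frozenset({"Vendor A", "Vendor B"}),
    ),
]


def required_capabilities(use_case):
    """All capabilities a use case depends on, CDP or not."""
    return [c for c in STATIC_DATA if use_case in c.use_cases]


def non_cdp_dependencies(use_case):
    """Capabilities the use case needs that no CDP will supply."""
    return [c.name for c in required_capabilities(use_case) if not c.cdp_feature]


if __name__ == "__main__":
    print(non_cdp_dependencies("Real time web interactions"))
```

Keeping the non-CDP rows in the same list is what lets the process flag a use case that a CDP alone cannot deliver.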

The second table looks at the items that depend on user input. The only direct user inputs are to choose which use cases apply (not shown here) and to indicate which capabilities already exist in current systems. All other items are derived from those inputs and the static data.

The columns are:

- Nbr Use Cases Needing: this shows how many use cases require this capability. It’s the sum of the capability values for the selected use cases.

- Already Have: this is the user’s input, showing which of the required capabilities are already available. In the sample table, the last row (site tag) is an existing capability. Since it exists, you can leave it out of the RFP.

- Nbr Gaps: the number of use cases that need the capability, excluding capabilities that are already available. These are gaps. Using the number of cases, rather than a simple 1 or 0, provides some sense of how important it is to fill each gap.

- Nbr CDP Gaps: the number of gap use cases that might be enabled by a CDP. In the example, the first row, Web – accept input (ability of a Web site to accept external input), isn’t a CDP attribute, so this value is set to zero.

- Gaps Filled by Vendor: the number of CDP Gaps filled by each vendor, based on the vendor capabilities. A total at the bottom of each column shows the sum for all capabilities for each vendor. This gives a rough indicator of which vendors are the best fit for a particular user.
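The derived columns above amount to a few lines of arithmetic. Here is a minimal Python sketch under stated assumptions: the rows in `STATIC`, the use case names, and the vendor assignments are made up for illustration, and the function and key names are mine rather than anything in the spreadsheet.

```python
# (capability, is CDP feature, use cases needing it, vendors providing it)
# All rows are illustrative placeholders.
STATIC = [
    ("Web - accept input", False, {"Real time web interactions"}, set()),
    ("Identity resolution", True,
     {"Real time web interactions", "Web behavior analysis"},
     {"Vendor A", "Vendor B"}),
    ("Real time interactions", True, {"Real time web interactions"}, {"Vendor B"}),
    ("Single source access", True, {"Point of sale analysis"}, {"Vendor A"}),
    ("Site tag", True,
     {"Real time web interactions", "Web behavior analysis"},
     {"Vendor A", "Vendor B"}),
]
VENDORS = ["Vendor A", "Vendor B"]


def derive(selected, already_have):
    """Compute the derived columns for the buyer's two inputs."""
    rows = []
    totals = {v: 0 for v in VENDORS}
    for name, cdp_feature, use_cases, vendors in STATIC:
        # Nbr Use Cases Needing: selected use cases that require this row
        needing = len(use_cases & selected)
        # Nbr Gaps: zero when the buyer already has the capability
        gaps = 0 if name in already_have else needing
        # Nbr CDP Gaps: only gaps that a CDP could fill
        cdp_gaps = gaps if cdp_feature else 0
        # Gaps Filled by Vendor: CDP gaps credited to vendors that have it
        filled = {v: (cdp_gaps if v in vendors else 0) for v in VENDORS}
        for v in VENDORS:
            totals[v] += filled[v]
        rows.append({
            "capability": name,
            "nbr_use_cases_needing": needing,
            "already_have": name in already_have,
            "nbr_gaps": gaps,
            "nbr_cdp_gaps": cdp_gaps,
            "gaps_filled_by_vendor": filled,
        })
    return rows, totals


rows, totals = derive(
    selected={"Real time web interactions", "Web behavior analysis"},
    already_have={"Site tag"},
)

if __name__ == "__main__":
    for row in rows:
        print(row)
    print("Totals:", totals)
```

With these sample inputs the site tag row drops out because it already exists, and the Web input row shows up as a gap that no CDP vendor gets credit for.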

The main outputs of this process would be:

- List of gaps, prioritized by how many use cases each gap is blocking and divided into gaps that a CDP could address and gaps that need to be addressed by other systems.

- List of CDP requirements, which can easily be transformed into an RFP. A complete RFP would have additional questions such as vendor background and pricing. But these are pretty much the same for all companies so they can be part of a standard template. The only other input needed from the buyer is information about her own company and goals. And even some goal information is implicit in the use cases selected.

- List of CDP vendors to consider, including which vendors fill which gaps and which have the best overall fit (i.e., fill the most gaps). This depends on having complete and accurate vendor information and will be a sensitive topic with vendors who hate to be excluded from consideration before they can talk to a potential buyer. So it's something we might not do right away. But it’s good to know it’s an option.
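The three outputs are mechanical once the gap counts exist. Here is a minimal Python sketch, assuming the derived rows are already available as dictionaries; the rows, descriptions, and vendor names in `ROWS` are hypothetical, and the requirement line format is just a stand-in for real RFP language.

```python
# Hypothetical derived rows: gap counts plus the static fields needed for output.
ROWS = [
    {"capability": "Web - accept input",
     "description": "Web site can accept external input",
     "nbr_gaps": 1, "cdp_feature": False, "vendors": set()},
    {"capability": "Identity resolution",
     "description": "Link identifiers that belong to the same customer",
     "nbr_gaps": 2, "cdp_feature": True, "vendors": {"Vendor A", "Vendor B"}},
    {"capability": "Real time interactions",
     "description": "Return customer data during a live interaction",
     "nbr_gaps": 1, "cdp_feature": True, "vendors": {"Vendor B"}},
    {"capability": "Site tag",
     "description": "Capture Web behavior with a site tag",
     "nbr_gaps": 0, "cdp_feature": True, "vendors": {"Vendor A", "Vendor B"}},
]


def gap_lists(rows):
    """Gaps sorted by use cases blocked, split into CDP and non-CDP gaps."""
    gaps = sorted((r for r in rows if r["nbr_gaps"] > 0),
                  key=lambda r: -r["nbr_gaps"])
    cdp = [r for r in gaps if r["cdp_feature"]]
    other = [r for r in gaps if not r["cdp_feature"]]
    return cdp, other


def rfp_requirements(rows):
    """One numbered requirement line per CDP gap."""
    cdp, _ = gap_lists(rows)
    return [f"{i}. {r['capability']}: {r['description']}"
            for i, r in enumerate(cdp, start=1)]


def vendor_fit(rows):
    """Vendors ranked by the number of CDP gaps they fill."""
    cdp, _ = gap_lists(rows)
    totals = {}
    for r in cdp:
        for vendor in r["vendors"]:
            totals[vendor] = totals.get(vendor, 0) + r["nbr_gaps"]
    # Highest total first; ties broken alphabetically.
    return sorted(totals.items(), key=lambda kv: (-kv[1], kv[0]))


if __name__ == "__main__":
    cdp_gaps, other_gaps = gap_lists(ROWS)
    print("Gaps for other systems:", [r["capability"] for r in other_gaps])
    print("\n".join(rfp_requirements(ROWS)))
    print("Vendor fit:", vendor_fit(ROWS))
```

The standard template questions (vendor background, pricing) would simply be appended to the generated requirement lines.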

Beyond the Basics

We could further refine the methodology by assigning weights to different use cases, to capabilities within each use case, to existing capabilities, and to vendor capabilities. This would give a much more nuanced representation of real-world complexity. Most of this could be done within the existing framework by assigning fractions rather than ones and zeros to the tables shown above. I’m not sure how much added value users would get from the additional work, in particular given how uncertain many of the answers would be.
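To make the fractions idea concrete, here is a minimal Python sketch of one possible weighting scheme. Everything in it is an assumption for illustration: the weights, the capability and vendor names, and above all the choice to multiply the four kinds of weight together, which is only one of several reasonable ways to combine them.

```python
# Importance of each use case to the buyer (0 to 1).
USE_CASE_WEIGHT = {
    "Real time web interactions": 1.0,
    "Web behavior analysis": 0.5,
}
# How strongly each use case depends on each capability (0 to 1).
NEED = {
    "Identity resolution": {
        "Real time web interactions": 1.0,
        "Web behavior analysis": 0.5,
    },
    "Real time interactions": {"Real time web interactions": 1.0},
}
# How much of each capability the buyer already has (0 to 1).
HAVE = {"Identity resolution": 0.5}
# How well each vendor delivers each capability (0 to 1).
VENDOR = {
    "Vendor A": {"Identity resolution": 1.0},
    "Vendor B": {"Identity resolution": 0.5, "Real time interactions": 1.0},
}


def weighted_gap(capability):
    """Weighted need for a capability, reduced by what already exists."""
    need = sum(USE_CASE_WEIGHT.get(use_case, 0.0) * weight
               for use_case, weight in NEED[capability].items())
    return need * (1.0 - HAVE.get(capability, 0.0))


def vendor_score(vendor):
    """Sum of weighted gaps, scaled by how well the vendor covers each one."""
    return sum(weighted_gap(c) * VENDOR[vendor].get(c, 0.0) for c in NEED)


if __name__ == "__main__":
    for capability in NEED:
        print(capability, weighted_gap(capability))
    for vendor in VENDOR:
        print(vendor, vendor_score(vendor))
```

Setting every weight to one or zero reduces this to the simple counts in the tables, which is why the refinement fits inside the existing framework.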

We could also write some rules to make general observations and recommendations based on the inputs, such as what to prioritize. We could even add a few more relevant questions to better assess the resources available to each user and further refine the recommendations. That would also be pretty easy and we could easily expand the outputs over time.

What’s Next?

But first things first. While I think I’ve solved the problem conceptually, the real work is just beginning. We need to refine the capability categories, create proper RFP language for each category, define an adequate body of use cases, map each use case to the required capabilities, create the RFP template, and research individual vendor capabilities. I’ll probably hold off on the last item because of the work involved and the potential impact of any errors.

Of course, we can refine all these items over time. The biggest initial challenge is transforming my Excel sheet into a functioning Web application. Any decent survey tool could gather the required input but I’m not aware of one that can do the subsequent processing and results presentation. A more suitable class of system would be the interactive content products used to generate quotes and self-assessments. There are lots of these and it will be a big project to sort through them. We’ll also be constrained by cost: anything over $100 a month will be a stretch. If anybody reading this has suggestions, please send me an email.

In the meantime, I’ll continue working with CDP Institute Sponsors and others to refine the categories, use cases, and other components. Again, anyone who wants to help out is welcome to participate.

This is a big project. But it directly addresses several of the key challenges facing CDP users today. I look forward to moving ahead.

Labels: cdp, customer data, martech, rfp, vendor selection

Tuesday, September 25, 2018

Salesforce Customer 360 Solution to Share Data Without a Shared Database

Salesforce has sipped the Kool-Aid: it led off the Dreamforce conference today with news of Customer 360, which aims to “help companies move beyond an app- or department-specific view of each customer by making it easier to create a single, holistic customer profile to inform every interaction”.

But they didn’t drink the whole glass. Customer 360 isn't assembling a persistent, unified customer database as described in the Customer Data Platform Institute's CDP definition. Instead, Salesforce is building connections to data that remains in its original systems – and is proud of it. As Senior Vice President of Product Management Patrick Stokes says in a supporting blog post, “People talk about a ‘single’ view of the customer, which implies all of this data is stored somewhere centrally, but that's not our philosophy. We believe strongly that a graph of data about your customer combined with a standard way for each of your applications to access that data, without dumping all the data into the same repository, is the right technical approach.”

Salesforce gets lots of points for clarity. Other things they make clear include:

- Customer 360 is in a closed pilot release today with general availability in 2019. (Okay, saying when in 2019 might be helpful, but we’ll take it.)

- Customer 360 currently unifies Salesforce’s B2C products, including Marketing, Customer Service, and Commerce. (Elsewhere, Salesforce does make the apparently conflicting assertions that “For customers of Salesforce B2B products, all information is in one place, in a single data model for marketing, sales, B2B commerce and service” and “Many Salesforce implementations on the B2B side, especially those with multi-org deployments, could be improved with Customer 360.” Still, the immediate point is clear.)

- Customer 360 will include an identity resolution layer to apply a common customer ID to data in the different Salesforce systems. (We need details but presumably those will be forthcoming.)

- Customer 360 is only about combining data within Salesforce products, but can be extended to include API connections with other systems through Salesforce Mulesoft. (Again, we need details.)

- Customer 360 is designed to let Salesforce admins set up connections between the related systems: it's not an IT tool (no coding is needed) and it's not for end-users (only some prebuilt packages with particular data mappings are available).

Is Salesforce right that the data should stay in its source systems? I say they’re not. The premise of the Customer Data Platform approach is that customer data needs to be extracted into a shared central database. The fundamental reason is that having the data in one place makes it easier to access because you’re not querying multiple source systems and potentially doing additional real-time processing when data is needed. A central database can do all this work in advance, enabling faster and more consistent response, and place the data in structures that are most appropriate for particular business needs. Indeed, a CDP can maintain the same data in different structures to support different purposes. It also can retain data that might be lost in operational systems, which frequently overwrite information such as customer status or location. This information can be important to understand trends, behavior patterns, or past context needed to build effective predictions. On a simpler level, a persistent database can accept batch file inputs from source systems that don’t allow an API connection or – and this is very common – from systems whose managers won’t allow direct API connections for fear of performance issues.

Obviously these arguments are familiar to the people at Salesforce, so you have to ask why they chose to ignore them – or, perhaps better stated, what arguments they felt outweighed them. I have no inside information but suspect the fundamental reason Salesforce has chosen not to support a separate CDP-style repository is that the data movement required to support it would be very expensive given the designs of their current products, creating performance issues and costs their clients wouldn't accept. It’s also worth noting that the examples Salesforce gives for using customer data mostly relate to real-time interactions such as phone agents looking up a customer record. In those situations, access to current data is essential: providing it through real-time replication would be extremely costly while reading it directly from source systems is quite simple. So if Salesforce feels real-time interactions are the primary use case for central customer data, it makes sense to take their approach and sacrifice the historical perspective and improved analytics that a separate database can provide.

It’s interesting to contrast Salesforce’s approach with yesterday’s Open Data Initiative announcement from Adobe, Microsoft and SAP. That group has taken exactly the opposite tack, developing a plan to extract data from source systems and load it into an Azure database. This is a relatively new approach for Adobe, which until recently argued – as Salesforce still does – that creating a common ID and accessing data in place was enough. That they tried and abandoned this method suggests that they found it won’t meet customer needs. It could even be cited as evidence that Salesforce will eventually reach the same conclusion. But it’s also worth noting that Adobe’s announcement focused primarily on analytical uses of the unified customer data and their strongest marketing product is Web analytics. Conversely, Salesforce’s heritage is customer interactions in CRM and email. So it may be that each vendor has chosen the approach which best supports its core products.

(An intriguing alternative explanation, offered by TechCrunch, is that Adobe, Microsoft and SAP have created a repository specifically to make it easier for clients to extract their data from Salesforce. I’m not sure I buy this but the same logic would explain why Salesforce has chosen an approach that keeps the core data locked safely within existing Salesforce systems.)

I’ll state the obvious by pointing out that companies need both analytics and interactions. We already know that many CDPs can access data in place, most commonly applied to information such as location or weather which changes constantly and is only relevant when an interaction occurs. So a hybrid approach is already common (though not universal) in the CDP world. Salesforce does say that “Customer 360 creates and stores a customer profile”, so some persistence is already built into the product. We don’t know how much data is kept in that profile and it might only be the identifiers needed for cross-system identity resolution. (That’s what Adobe stored persistently before it changed its approach.) You could view this as the seed of a hybrid solution already planted within Customer 360. But while it can probably be extended to some degree, it’s not the equivalent of a CDP that is designed to store most data centrally.

My guess is that Salesforce will eventually decide, as Adobe has already, that a large central repository is necessary. Customer 360 builds connections that are needed to support such a repository, so it can be viewed as a step in that direction, whether or not that's the intent. Since a complete solution needs both central storage and direct access, we can view the challenge as finding the right balance between the two storage models, not picking one or the other exclusively. Finding a balance isn't as much fun as having a religious war over which is absolutely correct but it's ultimately the best solution for marketers and other users.

And what does all this mean for the independent CDP market? Like Adobe yesterday, Salesforce is describing a product in its early stages – although the Salesforce approach is technically less challenging and closer to delivery. It will appeal primarily to companies that use the three Salesforce B2C systems, which I think is a relatively small subset of the business world. Exactly how non-Salesforce systems are integrated through Mulesoft isn’t yet clear: in particular, I wonder how much identity resolution will be possible.

But I still feel the access-in-place approach solves only a part of the problem addressed by CDPs and not the most important part at that. We know from research and observation that the most common CDP use cases are analytics, not interactions: although coordinated omnichannel customer experience is everyone's ultimate goal, initial CDP projects usually focus on understanding customers and analyzing their behaviors over time. In particular, artificial intelligence relies heavily on comprehensive customer data sets that need to be assembled in a persistent data store outside of the source systems. Given the central role that AI is expected to play in the future, it’s hard to imagine marketers enthusiastically embracing a Salesforce solution that they recognize won't assemble AI training sets. They’re more likely to invest in one solution that meets both analytical (including AI) and interaction needs. For the moment, that puts them firmly back into CDP territory (including Datorama, which Salesforce bought in August).

The big question is how long this moment lasts. Salesforce and Adobe/Microsoft/SAP will all get lots of feedback from customers once they deploy their solutions. We can expect them to be fast learners and pragmatic enough to extend their architectures in whatever ways are needed to meet customer requirements. The threat of those vendors deploying truly competitive products has always hung over the CDP industry and is now more menacing than ever. There may even be some damage before those vendors deploy effective solutions, if they scare off investors and confuse buyers or just cause buyers to defer their decisions. CDP vendors and industry analysts, who are already struggling to help buyers understand the nuances of CDP features, will have an even harder job to explain the strengths and weaknesses of these new alternatives. But the biggest job belongs to the buyers themselves: they're the ones who will most suffer if they pick products that don't truly meet their needs.

Monday, September 24, 2018

Adobe, Microsoft and SAP Announce Open Data Initiative: It's CDP Turf But No Immediate Threat

The reason the shared Microsoft project was on Adobe managers’ minds became clear today when Adobe, Microsoft and SAP announced an “Open Data Initiative” that seemed pretty much the same news as before – open source data models (for customers and other objects) feeding a system hosted on Azure. The only thing that really seemed new was SAP’s involvement. And, as became clear during analyst questions after the announcement at Microsoft’s Ignite conference, this is all in very early stages of planning.

I’ll admit to some pleasure that these firms have finally admitted the need for unified customer data, a topic close to my heart. Their approach – creating a persistent, standardized repository – is very much the one I’ve been advocating under the Customer Data Platform label. I’ll also admit to some initial fear that a solution from these vendors will reduce the need for stand-alone CDP systems. After all, stand-alone CDP vendors exist because enterprise software companies including Microsoft, Adobe and SAP have left a major need unfilled.

But in reviewing the published materials and listening to the vendors, it’s clear that their project is in very early stages. What they said on the analyst call is that engineering teams have just started to work on reconciling their separate data models – which is the heart of the matter. They didn’t put a time frame on the task but I suspect we’re talking more than a year to get anything even remotely complete. Nor, although the vendors indicated this is a high strategic priority, would I be surprised if they eventually fail to produce something workable. That could mean they produce something, but it’s so complicated and exception-riddled that it doesn’t meet the fundamental goal of creating truly standardized data.

Why I think this could happen is that enterprise-level customer data is very complicated. Each of these vendors has multiple systems with data models that are highly tuned to specific purposes and are still typically customized or supplemented with custom objects during implementation. It’s easy to decide there’s an entity called “customer” but hard to agree on one definition that will apply across all channels and back-office processes. In practice, different systems have different definitions that suit their particular needs.

Reconciling these is the main challenge in any data integration project. Within a single company, the solution involves detailed, technical discussions among the managers of different systems. Trying to find a general solution that applies across hundreds of enterprises may well be impossible. In practice, you’re likely to end up with data models that support different definitions in different circumstances with some mechanism to specify which definition is being used in each situation. That may be so confusing that it defeats the purpose of having shared data, which is for different people to easily make use of it.

Note that CDPs are deployed at the company level, so they don’t need to solve the multi-company problem.*** This is one reason I suspect the Adobe/Microsoft/SAP project doesn’t pose much of a threat to the current CDP vendors, at least so long as buyers actually look at the details rather than just assuming the big companies have solved the problem because they’ve announced they're working on it.

The other interesting aspect of the joint announcement was its IT- rather than marketing-centric focus. All three of the supporting quotes in the press release came from CIOs, which tells you who the vendors see as partners. Nothing wrong with that: one of the trends I see in the CDP market is a separation between CDPs that focus primarily on data management (and enterprise-wide use cases and IT departments as primary users) and those that incorporate marketing applications (and marketing use cases and marketers as users). As you may recall, we recently changed the CDP Institute definition of CDP from “marketer-controlled” to “packaged software” to reflect the use of customer data across the enterprise. But most growth in the CDP industry is coming from the marketing-oriented systems. The Open Data Initiative may eventually make life harder for the enterprise-oriented CDPs, although I’m sure they would argue it will help by bringing attention to a problem that it doesn’t really solve, opening the way to sales of their products. It’s even less likely to impact sales of the marketing-oriented CDPs, which are bought by marketing departments who want tightly integrated marketing applications.

Another indication of the mindset underlying the Open Data Initiative is this more detailed discussion of their approach, from Adobe’s VP of Platform Engineering. Here the discussion is mostly about making the data available for analysis. The exact quote “to give data scientists the speed and flexibility they need to deliver personalized experiences” will annoy marketers everywhere, who know that data scientists are not responsible for experience design, let alone delivery. Although the same post does mention supporting real-time customer experiences, it’s pretty clear from context that the core data repository is a data lake to be used for analysis, not a database to be accessed directly during real-time interactions. Again, nothing wrong with that and not all CDPs are designed for real-time interactions, either. But many are and the capability is essential for many marketing use cases.

In sum: today’s announcement is important as a sign that enterprise software vendors are (finally) recognizing that their clients need unified customer data. But it’s early days for the initiative, which may not deliver on its promises and may not promise what marketers actually want or need. It will no doubt add more confusion to an already confused customer data management landscape. But smart marketers and IT departments will emerge from the confusion with a sound understanding of their requirements and systems that meet them. So it's clearly a step in the right direction.

__________________________________

*I didn't bother to comment on the Marketo acquisition in detail because, let’s face it, the world didn’t need one more analysis. But now that I’ve had a few days to reflect, I really think it was a bad idea. Not because Marketo is a bad product or it doesn’t fill a big gap in the Adobe product line (B2B marketing automation). It's because filling that gap won’t do Adobe much good. Their creative and Web analysis products already gave them a presence in every marketing department worth considering, so Marketo won’t open many new doors. And without a CRM product to sell against Salesforce, Adobe still won’t be able to position itself as a Salesforce replacement. So all they bought for $4.75 billion was the privilege of selling a marginally profitable product to their existing customers. Still worse, that product is in a highly competitive space where growth has slowed and the old marketing automation approach (complex, segment-based multi-step campaign flows) may soon be obsolete. If Adobe thinks they’ll use Marketo to penetrate small and mid-size accounts, they are ignoring how price-sensitive, quality-insensitive, support-intensive, and change-resistant those buyers are. And if they think they’ll sell a lot of add-on products to Marketo customers, I’d love to know what those would be.

** I wish Microsoft would just buy Adobe already. They’re like a couple that’s been together for years and had kids but refuses to get married.

*** Being packaged software, CDPs let users implement solutions via configuration rather than custom development. This is why they’re more efficient than custom-built data warehouses or data lakes for company-level projects.

Thursday, September 06, 2018

Customer Data Platforms vs Master Data Management: How They Differ

Master Data Management can be loosely defined as maintaining and distributing consistent information about core business entities such as people, products, and locations. (Start here if you’d like to explore more formal definitions.) Since customers are one of the most important core entities, it clearly overlaps with CDP.

Specifically, MDM and CDP both require identity resolution (linking all identifiers that apply to a particular individual), which enables CDPs to bring together customer data into a comprehensive unified profile. In fact some CDPs rely on MDM products to perform this function.
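As a rough illustration of what “linking all identifiers that apply to a particular individual” involves, here is a minimal Python sketch. It assumes the simplest case, deterministic matching, where source systems have already reported pairs of identifiers known to belong to the same person. The function name `resolve_identities` and the sample identifiers are hypothetical, and real MDM and CDP products typically add fuzzy and probabilistic matching on top of this.

```python
def resolve_identities(links):
    """Group identifiers into profiles, given pairs known to match.

    Uses a union-find (disjoint set) structure: every identifier points
    toward a representative, and linked identifiers share one.
    """
    parent = {}

    def find(x):
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # shorten the path as we walk it
            x = parent[x]
        return x

    def union(a, b):
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a

    for a, b in links:
        union(a, b)

    # Collect identifiers under their shared representative.
    groups = {}
    for identifier in list(parent):
        groups.setdefault(find(identifier), set()).add(identifier)
    return list(groups.values())


# Made-up links: an email tied to a CRM ID, and that CRM ID tied to a cookie.
links = [
    ("email:ann@example.com", "crm:1001"),
    ("crm:1001", "cookie:abc"),
    ("email:bob@example.com", "crm:2002"),
]
profiles = resolve_identities(links)
```

Here the three identifiers for Ann end up in one group even though the email and the cookie were never directly linked to each other; the shared CRM ID connects them. That transitive linking is what lets a unified profile pull in data from systems that don't share a common key.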

MDM and (some) CDP systems also create a “golden record” containing the business’s best guess at customer attributes such as name and address. That’s the “master” part of MDM. It often requires choosing between conflicting information captured by different systems or by the same system at different times. CDP and MDM both share that golden record with other systems to ensure consistency.
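Choosing between conflicting values can be sketched in a few lines of Python. The rule used here, "take the most recently updated non-empty value for each attribute", is only one assumed survivorship rule chosen for illustration; actual products let you configure others, such as ranking sources by trust. The function name `golden_record` and the sample records are hypothetical.

```python
from datetime import date


def golden_record(records):
    """Build one best-guess record from conflicting source records.

    Assumed rule: for each attribute, keep the most recently updated
    non-empty value. Empty values never overwrite known ones.
    """
    best = {}
    # Process oldest first so newer non-empty values win.
    for record in sorted(records, key=lambda r: r["updated"]):
        for field, value in record["attributes"].items():
            if value:
                best[field] = value
    return best


# Made-up records for the same person from two systems.
records = [
    {
        "source": "crm",
        "updated": date(2018, 2, 1),
        "attributes": {"name": "Ann Smith", "address": "1 Old Rd"},
    },
    {
        "source": "web",
        "updated": date(2018, 8, 15),
        "attributes": {"name": "", "address": "9 New St"},
    },
]
golden = golden_record(records)
```

With these inputs the golden record takes the newer address from the web system but keeps the name from the CRM, because the web record's name is blank. The result is what would then be shared back to other systems.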